Arts in eXtended Reality (XR)

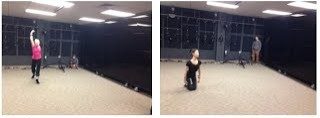

Fluid Echoes is a research and performance project investigating how deep neural networks can learn to interpret dance through human joints, gestures, and movement qualities. The technology will be applied immediately to allow dancers to generate and perform choreography remotely, as necessitated by the COVID-19 quarantine. Researchers collaborating from their homes across the visual arts (Zach Duer), performing arts (Scotty Hardwig), and engineering (Myounghoon Jeon) at Virginia Tech are working in partnership with the San Francisco-based dance company LEVYdance to build this new platform, funded by the Virginia Tech Institute for Creativity, Arts, and Technology (ICAT) COVID-19 Rapid Response Grant and by LEVYdance.

Virtual reality (VR) is an emerging medium for artistic expression that enables live, immersive aesthetics for interactive media. However, VR-based interactive media are often consumed in a solitary setup and cannot easily be shared in social settings. Providing a VR headset for every bystander and synchronizing the headsets can be costly and cumbersome. In practice, bystanders are given a secondary screen that mirrors what the VR user is seeing, but this does not give them a holistic view. To engage bystanders in VR-based interactive media, we propose a technique that lets them see the VR headset user and their experience from a third-person perspective. We have developed a physical apparatus through which bystanders see the VR environment on a tablet screen. We use a motion tracking system to create a virtual camera in VR and map the apparatus’ physical location to the location of that virtual camera. Bystanders can use the apparatus like a camera viewfinder: they move freely, look into the virtual world, and control their viewpoint as active spectators. We hypothesize that this third-person view will improve bystanders’ engagement and sense of immersion. We also anticipate that giving audience members control over their point of view (POV) will enhance their sense of agency in the viewing experience. We plan to test these hypotheses through user studies to determine whether our approach improves the bystanders’ experience. This project is conducted in collaboration with Dr. Sangwon Lee in Computer Science (CS) and Zach Duer in Visual Arts, supported by the Institute for Creativity, Arts, and Technology (ICAT) and the National Science Foundation (NSF).
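
The core of the viewfinder is a coordinate mapping from the tracked tablet to the virtual camera. The Python sketch below is a minimal illustration, assuming the motion tracking system reports the apparatus' position in metres and its heading (yaw) in degrees, and that a one-time calibration (the hypothetical `Calibration` fields `offset_*`, `yaw_offset_deg`, and `scale`) relates tracking space to the VR scene. It handles only yaw for brevity; a full implementation would carry the complete 3D orientation, typically as a quaternion.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Position in metres plus heading (yaw) in degrees about the vertical y axis."""
    x: float
    y: float
    z: float
    yaw_deg: float


@dataclass
class Calibration:
    """Hypothetical one-time calibration relating tracking space to VR world space."""
    offset_x: float = 0.0
    offset_y: float = 0.0
    offset_z: float = 0.0
    yaw_offset_deg: float = 0.0  # rotation of the tracking frame relative to the world
    scale: float = 1.0           # e.g. > 1 lets a small room cover a larger scene


def tracked_to_virtual_camera(tracked: Pose, cal: Calibration) -> Pose:
    """Map the tablet's tracked pose to the pose of the virtual camera."""
    theta = math.radians(cal.yaw_offset_deg)
    c, s = math.cos(theta), math.sin(theta)
    # Rotate the tracked position about the vertical (y) axis into the world frame.
    wx = c * tracked.x + s * tracked.z
    wz = -s * tracked.x + c * tracked.z
    # Then scale and translate, and rotate the heading by the same yaw offset.
    return Pose(
        x=cal.scale * wx + cal.offset_x,
        y=cal.scale * tracked.y + cal.offset_y,
        z=cal.scale * wz + cal.offset_z,
        yaw_deg=(tracked.yaw_deg + cal.yaw_offset_deg) % 360.0,
    )
```

In a render loop, each frame would read the tablet's latest pose, pass it through `tracked_to_virtual_camera`, and assign the result to the camera that renders to the tablet screen. Keeping the calibration separate from the per-frame mapping lets the tracked area be re-aligned with the scene without touching the rest of the pipeline.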

Augmented Reality applications have focused mostly on the visual modality, using visual augmentations to enhance users’ reality. Other modalities, such as audio, have largely been ignored in Augmented Reality applications. Nonetheless, auditory displays have in multiple instances been described as enhancing or augmenting users’ perception of their environment. To support the development of Augmented Reality applications in the auditory modality, this project sought to provide a concrete definition and taxonomy for the concept of Audio Augmented Reality (AAR). Following the Grounded Theory methodology, this purely qualitative study generated data on Augmented Reality and auditory displays through conference workshops, expert interviews, and focus groups. The findings provide a definition and taxonomy for AAR that are grounded in data.

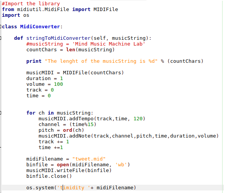

In this new AAR framework, we tie together the beauty of visual art and music by developing a sonification system that maps image characteristics (e.g., salient regions) and semantics (e.g., art style, historical period, feeling evoked) to relevant sound structures. The goal of this project is to produce music that is not only unique and high-quality but also fitting for the artworks from which the data were mapped. To this end, we interviewed experts in music, sonification, and visual arts and extracted key factors for making appropriate mappings. We plan to conduct successive empirical experiments with various design alternatives. The sonification algorithms were developed using a combination of JythonMusic, Python, and machine learning algorithms.

* This project has been supported by the VT Institute for Creativity, Arts, and Technology (ICAT).
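
To make the idea of mapping image characteristics to sound structures concrete, here is a deliberately simple sketch in plain Python. It assumes a grayscale image given as rows of 0-255 brightness values, uses column brightness as a stand-in for salient regions, and uses a hypothetical style-to-tempo table (`STYLE_TEMPO_BPM`) as a stand-in for semantic mappings. It does not use JythonMusic and is not the project's expert-derived mapping.

```python
# Hypothetical stand-in mappings; the project's actual mappings came from expert interviews.
MAJOR_SCALE = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets of a major scale
STYLE_TEMPO_BPM = {"baroque": 96, "impressionism": 72, "expressionism": 120}


def brightness_to_pitch(brightness: float, lowest_note: int = 48, octaves: int = 2) -> int:
    """Map a 0-255 brightness value onto a MIDI note of a major scale."""
    steps = len(MAJOR_SCALE) * octaves
    index = min(int(brightness / 256 * steps), steps - 1)
    octave, degree = divmod(index, len(MAJOR_SCALE))
    return lowest_note + 12 * octave + MAJOR_SCALE[degree]


def sonify_image(image: list[list[float]], style: str) -> tuple[int, list[int]]:
    """Scan a grayscale image left to right: one note per column, tempo from style."""
    notes = []
    for col in range(len(image[0])):
        column = [row[col] for row in image]
        notes.append(brightness_to_pitch(sum(column) / len(column)))
    # Unknown styles fall back to a neutral tempo.
    return STYLE_TEMPO_BPM.get(style, 90), notes
```

The resulting MIDI note numbers and tempo could then be handed to any synthesis or notation library for playback.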

Sonification can translate emotional data into sound, allowing listeners to interpret emotional information. However, the effectiveness of sonification varies depending on its application. The main purpose of this study is to investigate how emotion-reflecting and emotion-mitigating sonification influence the experience of listening to fairy tales. We analyzed three fairy tales using sentiment analysis and created two types of sonification: one that reflects the representative emotion of each paragraph (emotion-reflecting sonification) and another that reflects the emotion opposite to that of the paragraph (emotion-mitigating sonification). For the storytelling agent, we used the humanoid robot NAO and designed three voices (male, female, and child) with Microsoft Azure. We conducted a focus group study to assess the impact of emotion-reflecting sonification, emotion-mitigating sonification, and no sonification on participants’ listening experiences during the robot's storytelling. Participants rated the “pleasing”, “empathy”, and “immersiveness” categories higher for emotion-mitigating sonification than for emotion-reflecting sonification. Moreover, participants preferred the male voice over the female and child voices.
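
The difference between the two conditions comes down to which emotion label drives the sound for each paragraph. The sketch below assumes a toy word-list sentiment scorer (the lexicons are hypothetical placeholders for whatever sentiment analysis tool was used) and treats the opposite of a neutral paragraph as neutral, which is also an assumption.

```python
from typing import Optional

# Toy lexicons for illustration only; not the study's sentiment analysis.
POSITIVE_WORDS = {"happy", "joy", "kind", "love", "laughed", "safe"}
NEGATIVE_WORDS = {"sad", "afraid", "dark", "cried", "lost", "angry"}
OPPOSITE = {"positive": "negative", "negative": "positive", "neutral": "neutral"}


def paragraph_emotion(paragraph: str) -> str:
    """Label a paragraph by counting positive minus negative lexicon words."""
    words = [w.strip('.,!?;:"\'').lower() for w in paragraph.split()]
    score = sum(w in POSITIVE_WORDS for w in words) - sum(w in NEGATIVE_WORDS for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def sonification_emotion(paragraph: str, condition: str) -> Optional[str]:
    """Emotion that the sound should convey for this paragraph under a condition."""
    emotion = paragraph_emotion(paragraph)
    if condition == "reflecting":
        return emotion
    if condition == "mitigating":
        return OPPOSITE[emotion]
    if condition == "none":
        return None  # no sonification
    raise ValueError(f"unknown condition: {condition}")
```

Each returned label would then select a pre-composed sound cue to play alongside the robot's narration of that paragraph.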

In this project, we worked with a professional dancer to augment her performance with technology. We used a respiratory sensor and a motion tracking system to modify the imagery and sound according to the dancer's breathing patterns and location throughout the performance.

We are making two versions of this performance: one in which the dancer's breathing and location data are mapped to visuals and sound by a human technologist, and one in which these data are mapped with AI in the loop. In doing so, we offload some of the creative work of the human technologist onto the AI. We will run each of the two performances twice: in one run, audience members will learn how the technology was designed before they watch the performance and complete a survey on their perception of it; in the other, they will learn this only after watching and completing the survey. Through this design, we seek to understand whether biases are present in how people respond to and value creative works made by AI.
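
For the human-designed version, a breathing-and-location mapping can be as simple as normalizing and smoothing the sensor signal and scaling it onto media parameters. This sketch assumes a respiratory sensor that yields a raw chest-expansion reading with a known minimum and maximum, a tracked horizontal stage position, and three hypothetical output parameters (filter cutoff, image brightness, stereo pan); none of these specifics come from the project.

```python
class BreathMapper:
    """Hypothetical mapping from a respiration reading and stage position to media parameters."""

    def __init__(self, raw_min: float, raw_max: float, alpha: float = 0.2):
        self.raw_min = raw_min
        self.raw_max = raw_max
        self.alpha = alpha      # smoothing factor of the exponential moving average
        self.smoothed = None    # smoothed breath level in [0, 1]

    def update(self, raw: float, x: float, stage_width: float) -> dict:
        # Normalize the raw reading to [0, 1] and clamp outliers.
        level = (raw - self.raw_min) / (self.raw_max - self.raw_min)
        level = min(max(level, 0.0), 1.0)
        # Exponential moving average to suppress sensor jitter.
        if self.smoothed is None:
            self.smoothed = level
        else:
            self.smoothed = self.alpha * level + (1 - self.alpha) * self.smoothed
        # Map stage position (0 .. stage_width) to stereo pan (-1 .. +1).
        pan = min(max(2 * x / stage_width - 1, -1.0), 1.0)
        return {
            "filter_cutoff_hz": 200 + self.smoothed * 4800,  # inhaling opens the filter
            "image_brightness": self.smoothed,
            "pan": pan,  # -1 = stage left, +1 = stage right
        }
```

The smoothing factor `alpha` trades responsiveness for stability: a small `alpha` hides sensor jitter but makes the media lag behind the dancer's breath.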

Immersive 360-degree virtual reality (VR) displays enhance art experiences but can isolate users from their surroundings and from other people, reducing social interaction in public spaces such as galleries and museums. In this project, we explored the application of a cross-device mixed reality (MR) platform to make art experiences with VR technology more social and inclusive. Our concept features co-located audiences of head-mounted display users and mobile device users who interact across the physical and virtual worlds. We collaborated with an artist to develop an interactive MR artwork, conducted focus groups and expert interviews to identify potential scenarios, and created a taxonomy. Our exploration points to a prospective direction for future VR/MR aesthetic content, especially for public events and exhibitions that target large audiences.

* This project has been supported by National Science Foundation (NSF).

For this project, we built a collaborative crochet music maker that detects when two individuals are crocheting in synchrony. We first segmented the physical procedure for making the simplest stitch in crochet, the single crochet, from which all other crochet stitches are built. After segmenting this procedure into three parts, we trained a dynamic time warping (DTW) model to classify live hand motion data. We also wrote a small program that compares the live classification results of two users and determines whether they are synchronized. The leading user's data are continuously sonified; when the two users are synchronized, the system plays a chord an octave below the leading user's most recent note.

After building this system, we ran a participatory design study with experienced and novice crocheters, as well as experts in sonification, HCI, and audio technology, to improve the system and gather insights into designing user interfaces for users with different levels of embodied knowledge of specific tasks.
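
The classification-and-synchrony pipeline can be sketched with a plain DTW nearest-neighbour classifier. The sketch assumes each live window is a 1-D motion signal (real hand-motion data would be multi-channel), that each of the three stitch segments has one recorded template, and that two users count as synchronized when their last `k` segment labels match; the segment names and the major-triad voicing of the chord are hypothetical.

```python
import math


def dtw_distance(a: list[float], b: list[float]) -> float:
    """Classic dynamic time warping distance between two 1-D sequences."""
    n, m = len(a), len(b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: insertion, deletion, or match.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def classify(window: list[float], templates: dict[str, list[float]]) -> str:
    """1-nearest-neighbour classification: label of the closest template under DTW."""
    return min(templates, key=lambda label: dtw_distance(window, templates[label]))


def synchronized(labels_a: list[str], labels_b: list[str], k: int = 3) -> bool:
    """Treat two users as synchronized if their last k segment labels match."""
    if len(labels_a) < k or len(labels_b) < k:
        return False
    return labels_a[-k:] == labels_b[-k:]


def sync_chord(last_leader_note: int) -> list[int]:
    """MIDI notes of a major triad rooted an octave below the leader's last note."""
    root = last_leader_note - 12
    return [root, root + 4, root + 7]
```

A template-matching classifier like this needs only one example recording per segment, which suits a small, task-specific gesture vocabulary; the cost is an O(n·m) comparison per template for every window.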

In this project, we aim to understand the transferability of skills used to describe algorithms across domains. We are specifically interested in whether pairing gestures with the components of a written algorithmic language found in art (crochet) affects individuals’ ability to recall the elements of that language, and whether this relates to their ability to decode unfamiliar algorithmic languages in computing. The goal of the study is to inform STEM education by demonstrating how fine motor gestures can help learners build and describe abstract concepts, widening the channels through which STEM concepts are promoted and communicated.

We hypothesize that participants who learn the corresponding gestures along with the written crochet language will perform better on tests of the written crochet language than those who learn it without gestures. We also hypothesize that participants who learn the gestures will be better equipped to understand other written algorithmic languages and will therefore perform better on a test of a computer-based algorithmic language than those who learned the crochet language without gestures. Finally, we expect that individuals who score highly on the crochet language test will also score highly on the computer language test, regardless of whether they have learned the corresponding crochet gestures.

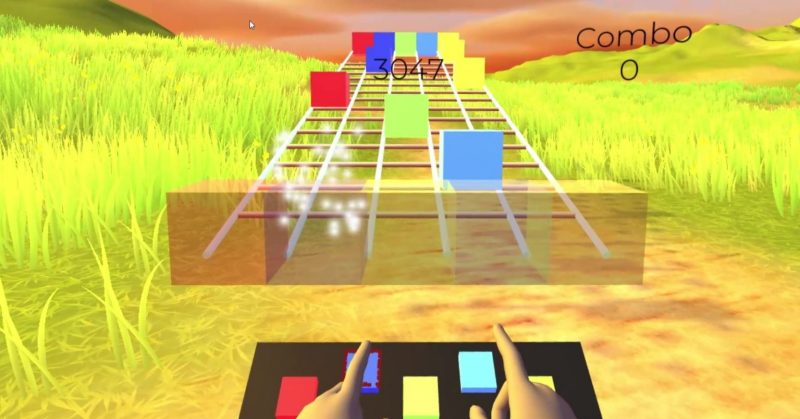

Providing an immersive experience to virtual reality (VR) users has been a goal of HCI researchers and developers for decades. Delivering haptic feedback in virtual environments (VEs) is one approach to providing engaging and immersive experiences. Besides haptic feedback, the interaction method is another factor that affects user experience in a VE. Currently, controllers are the primary interaction method in most VR applications: users indirectly manipulate virtually rendered hands with buttons or triggers. However, hand tracking technology and head-mounted displays (HMDs) with built-in cameras have made gesture-based interaction a main interaction method in current VR applications. Hand tracking-based interaction offers a natural and intuitive experience, consistency with real-world interactions, and freedom from burdensome hardware, resulting in a more immersive user experience. In this project, we explored the effects of interaction methods and vibrotactile feedback on the user's experience in a VR game. We investigated the sense of presence, engagement, usability, and objective task performance under three conditions: (1) VR controllers, (2) hand tracking without vibrotactile feedback, and (3) hand tracking with vibrotactile feedback. The objective of the game is to obtain the highest score by hitting buttons as accurately as possible in time with the music. We also developed a device that delivers vibrotactile feedback to the user's fingertips while the hands are tracked by the VR headset's built-in camera. We observed that hand tracking enhanced the user's sense of presence, engagement, usability, and task performance. Adding vibrotactile feedback improved presence and engagement even more clearly.

* This project has been supported by the VT Institute for Creativity, Arts, and Technology (ICAT).
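
The game's scoring and the feedback condition can be sketched as timing windows around each beat. The window sizes, point values, and the rule of pulsing the fingertip actuator on every successful hit are hypothetical, since the actual thresholds are not given here.

```python
from typing import Optional

# Hypothetical timing windows in seconds; the game's real thresholds are not stated.
PERFECT_WINDOW_S = 0.05
GOOD_WINDOW_S = 0.12


def judge_hit(hit_time: float, beat_time: float) -> tuple[str, int]:
    """Grade one button press by its timing error relative to the beat."""
    error = abs(hit_time - beat_time)
    if error <= PERFECT_WINDOW_S:
        return "perfect", 100
    if error <= GOOD_WINDOW_S:
        return "good", 50
    return "miss", 0


def should_vibrate(judgement: str, feedback_enabled: bool) -> bool:
    """Pulse the fingertip actuator only on a successful hit, in the feedback condition."""
    return feedback_enabled and judgement != "miss"


def score_session(beat_times: list[float], hit_times: list[Optional[float]]) -> int:
    """Sum points over beats; None means the player did not press for that beat."""
    total = 0
    for beat, hit in zip(beat_times, hit_times):
        if hit is not None:
            total += judge_hit(hit, beat)[1]
    return total
```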

In this project, we investigate the effect of interaction methods and vibrotactile feedback on the user's sense of social presence, presence, engagement, and objective performance in a multiplayer cooperative VR game. To sculpt, participants will manipulate simple 3D objects such as cubes, spheres, and cylinders; by combining and manipulating these objects, players will be able to create entirely new artifacts. Alongside the gameplay, we developed a fingertip vibrotactile feedback device that provides haptic feedback while players use hand tracking.

* This project has been supported by the VT Institute for Creativity, Arts, and Technology (ICAT).

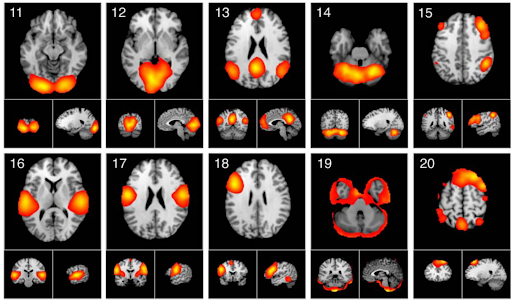

In this project, we seek to sonify functional magnetic resonance imaging (fMRI) brain-imaging readings from neurotypical individuals and from individuals with schizophrenia and autism spectrum disorder (ASD) to exhibit differences in brain activity and raise awareness of these neurological conditions. To do so, we modulate music samples from various genres based on the fMRI readings so that they reflect the contrast between fMRI data from neurotypical individuals and data from people with diverse neurological conditions. By presenting these sonified samples, we aim to promote understanding of various neurological conditions as well as of sonification in general.

fMRI Activation Image
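
One way to let fMRI readings modulate a music sample is to turn an activation time series into a gain envelope. The sketch below assumes a single region-of-interest activation series, normalizes it to [0, 1], linearly interpolates it to one value per audio sample, and applies it as amplitude modulation; the choice of amplitude (rather than, say, pitch or tempo) and the `min_gain` floor are assumptions.

```python
def normalize(series: list[float]) -> list[float]:
    """Rescale a series to [0, 1]; a flat series maps to all ones."""
    lo, hi = min(series), max(series)
    if hi == lo:
        return [1.0] * len(series)
    return [(v - lo) / (hi - lo) for v in series]


def activation_envelope(activation: list[float], n_samples: int, min_gain: float = 0.2) -> list[float]:
    """Linearly interpolate a normalized activation series to one gain per audio sample."""
    norm = normalize(activation)
    if n_samples == 1:
        return [min_gain + (1 - min_gain) * norm[0]]
    envelope = []
    for i in range(n_samples):
        pos = i * (len(norm) - 1) / (n_samples - 1)  # position in activation time
        k = int(pos)
        frac = pos - k
        nxt = norm[min(k + 1, len(norm) - 1)]
        level = norm[k] + frac * (nxt - norm[k])
        envelope.append(min_gain + (1 - min_gain) * level)  # never fully silent
    return envelope


def modulate(samples: list[float], activation: list[float], min_gain: float = 0.2) -> list[float]:
    """Amplitude-modulate audio samples by the activation envelope."""
    envelope = activation_envelope(activation, len(samples), min_gain)
    return [s * g for s, g in zip(samples, envelope)]
```

Modulating the same sample once with a neurotypical series and once with a series from another group yields a pair of clips whose difference in dynamics reflects the difference in activation.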

While virtual reality (VR) is a powerful tool for enhancing science learning in informal settings, head-mounted displays may not be suitable for everyone and pose potential safety risks, especially for groups of users in confined spaces. In this project, we used handheld mobile devices as a safer, collaborative alternative that still offers an immersive 3D learning environment. An interdisciplinary research team was formed at Virginia Tech with faculty and students from various departments, including engineering, education, and visual arts, who contributed their expertise in platform development, content creation, design, and execution. The project partnered with local elementary schools and the science museum to showcase the content as a permanent educational asset for the community.

* This project has been supported by National Science Foundation (NSF).

In this project, our goal is to investigate how incorporating sound into the representation of planetary features can enhance user presence, motivation, and social interaction in a handheld virtual reality (VR) environment. We are particularly interested in how different auditory displays can enhance the immersive experience and engagement of users in an informal educational setting. We also aim to explore the trade-off between giving users control over the sound settings and the overall effectiveness and enjoyment of the auditory display. We are currently implementing VR scenes for user studies to assess our approach and gain insights into integrating sound in handheld VR applications.

* This project has been supported by National Science Foundation (NSF).

While handheld-based virtual reality applications have the potential to accommodate large groups, little is known about how group size and interaction method affect user experience. In this project, we developed a handheld-based VR game for co-located groups of varying sizes (2, 4, and 8 users) and tested two interaction methods (proximity-based and pointing-based).

* This project has been supported by National Science Foundation (NSF).
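
The two interaction methods differ mainly in how a target is chosen. This sketch assumes positions in metres in a shared 3D frame, a hypothetical selection radius for proximity-based interaction, and a hypothetical angular tolerance for pointing along the device's forward vector; the game's actual selection rules are not specified here.

```python
import math


def nearest_within(user_pos, targets, radius):
    """Proximity-based: the closest target no farther than `radius` from the user."""
    best, best_dist = None, radius
    for name, pos in targets.items():
        dist = math.dist(user_pos, pos)
        if dist <= best_dist:
            best, best_dist = name, dist
    return best


def pointed_target(origin, direction, targets, max_angle_deg=5.0):
    """Pointing-based: the target closest in angle to the device's forward ray."""
    dir_len = math.hypot(*direction)
    best, best_angle = None, max_angle_deg
    for name, pos in targets.items():
        to_target = [p - o for p, o in zip(pos, origin)]
        dist = math.hypot(*to_target)
        if dist == 0:
            continue  # a target at the device position has no defined direction
        cos_a = sum(t * d for t, d in zip(to_target, direction)) / (dist * dir_len)
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))
        if angle <= best_angle:
            best, best_angle = name, angle
    return best
```

Proximity-based selection requires walking up to an object, which encourages movement through the shared space, whereas pointing works at a distance.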

Handheld devices offer an accessible alternative to head-mounted displays in virtual reality (VR). However, we lack insight into their performance in larger-scale environments. In this project, we conducted two user studies, examining three translation techniques (3DSlide, VirtualGrasp, and Joystick) in room-scale VR settings. We assessed usability and performance, considering factors like target size, distance, and user mobility. Results showed that the Joystick technique, which translates objects based on the user's perspective, was the fastest and most preferred, though precision was similar across techniques. These findings help inform the design of room-scale handheld VR systems and mixed reality applications.

* This project has been supported by National Science Foundation (NSF).
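
A perspective-relative joystick can be sketched by rotating the 2D joystick input by the handheld camera's heading before applying it to the object. The sketch assumes a y-up frame in which a heading of 0 degrees faces +z and +x is to the right (as in Unity), translation restricted to the horizontal plane, and a hypothetical `speed` in metres per second; it is not the study's implementation of the Joystick technique.

```python
import math


def joystick_translate(obj_pos, jx, jy, camera_yaw_deg, speed=1.0, dt=1 / 60):
    """Move an object in the horizontal plane relative to the viewer's heading.

    jx, jy: joystick axes in [-1, 1] (right and forward); speed in m/s; dt in seconds.
    """
    yaw = math.radians(camera_yaw_deg)
    forward = (math.sin(yaw), 0.0, math.cos(yaw))  # viewer's horizontal forward
    right = (math.cos(yaw), 0.0, -math.sin(yaw))   # viewer's horizontal right
    step = speed * dt
    return tuple(p + step * (jx * r + jy * f) for p, r, f in zip(obj_pos, right, forward))
```

Because the input is expressed relative to the viewer, pushing the stick forward always moves the object away from the user, however the user is turned.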

Toy consumption has become a significant global issue concerning environmental justice and climate change, with 80% of toys ultimately discarded despite their potential for reuse, contributing to increased landfill waste and incineration. This project addresses the research question: "How can we encourage children and parents to make environmentally beneficial decisions regarding end-of-life (EoL) toys to promote reuse behavior?" We plan to use a participatory research approach, involving local children and parents as key contributors in developing a new digital experience for EoL toys. This initiative aims to facilitate a gradual detachment from EoL toys and promote sharing practices within a circular economy framework.

* This project has been supported by the VT Institute for Creativity, Arts, and Technology (ICAT).