Assistive Robots & AI

Robots are increasingly being incorporated into larger, mixed human-robot teams, shifting the focus from simple dyadic interactions to more complex multi-robot scenarios. This study explored how robots influence human decision-making within these teams, focusing on how varying levels of robot agreeableness affect human perceptions and choices. Using the Desert Survival Situation, a controlled decision-making task, student participants took part in both dyadic and triadic interactions. By comparing these configurations, the study sheds light on how human-robot collaboration changes when multiple robots are involved, offering insights into how team composition and robot behavior shape effective decision-making in future multi-robot environments.

With the rapid advancement of robotics and AI, educating the next generation on ethical coexistence with these technologies is crucial. This project developed a child-robot theater afterschool program to introduce and discuss robot and AI ethics with elementary school children. The program blended STEM (Science, Technology, Engineering and Mathematics) with the arts to explore key ethical issues such as bias, transparency, privacy, usage, and responsibility. Using interactive scenarios and a theatrical performance, the program encouraged children to engage with ethical dilemmas in robotics and AI in a creative and accessible way. This project demonstrates an innovative approach to early education in ethics, preparing children for a future shaped by advanced technology.

Here's the project webpage: https://sites.google.com/view/child-robottheater/home?authuser=0

* This project has been supported by the National Science Foundation (NSF), VT Institute for Creativity, Arts, and Technology (ICAT), and VT Center for Human-Computer Interaction (CHCI).

Robot Musical Theater Afterschool Program at Eastern Montgomery Elementary School.

This study investigates how human emotions (a human-related factor), robot reliability (a robot-related factor), and team hierarchy (an environment-related factor) affect human-robot interaction (HRI) in an escape room setting. The primary focus is trust, along with other measures such as user perceptions and task performance. The goal of the study is to provide design and theoretical recommendations for future social robot systems that adapt to human emotions.

In this three-pronged interdisciplinary project, our lab was tasked with developing materials to understand how autistic STEM university students think about using AI within a comprehensive mentorship program that prepares them for the workforce. We developed a survey that asks participants about their previous mentorship or internship experiences, the aspects of joining the workforce they think they could use help with, and how they currently use technologies such as AI tools and social media. We also created a co-design interview in which autistic participants receive template user interfaces (UIs) and the tools to ideate, sketch, and modify both common and new AI-powered UI features. These templates include UIs for communication, productivity, interview practice, and networking. Before presenting the template UIs, we ask participants relevant questions to get them brainstorming.

* This project has been supported by the ORAU-Directed Research and Development (ODRD) program.

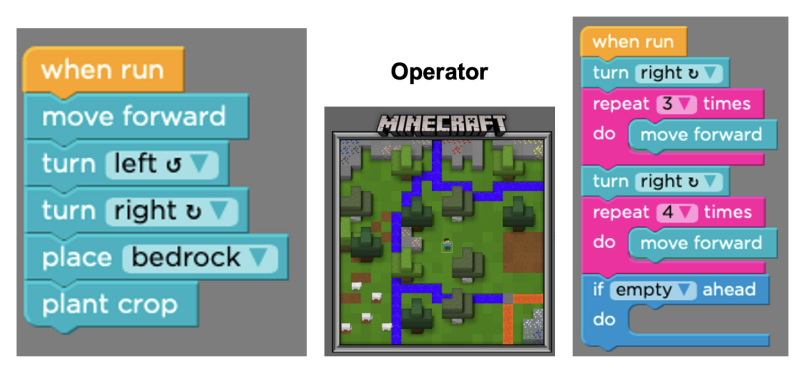

The Smart Exercise application is an Android application paired with a wearable Bluetooth IMU sensor. It is designed to provide real-time auditory and visual feedback on users' body motion while simultaneously collecting kinematic data on their performance. The application aims to help users improve their physical and cognitive function, increase their motivation to exercise, and give researchers and physical therapists access to accurate data on their participants' or patients' performance and exercise completion without the need for expensive additional hardware.

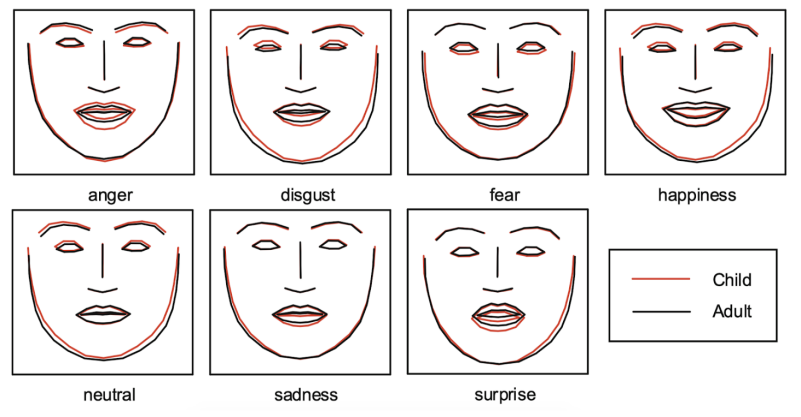

Research on facial expressions and their effects on human-robot interaction has produced mixed results. To unpack this issue further, we investigated users' emotion recognition accuracy and perceptions when interacting with a social robot that either did or did not display emotional facial expressions in a storytelling setting. The results highlight the importance of facial expressions as a design choice for social robots. The Northrop Grumman Undergraduate Research Award sponsored this study.

This project focuses on developing smart industrial robots that offer personalized support for autistic employees in STEM and manufacturing jobs. Our approach combines the co-design framework of mutual shaping with the principles of Self-Determination Theory (SDT). We will engage key stakeholders, including autistic adults and industry experts, throughout all development cycles in an iterative design process to advance industrial robot intelligence.

The primary objectives of this project are twofold: (1) to co-create support approaches based on SDT that address fundamental psychological needs (i.e., autonomy, competence, and relatedness) through interviews, focus groups, and human-in-the-loop simulations, and (2) to enhance robot intelligence for accurately identifying and meeting workers' psychological needs in manufacturing settings, resulting in adaptive and personalized support. By integrating SDT-based support into industrial robot design, we anticipate increased motivation, work quality, and job satisfaction for all employees. This neuro-affirming work environment will, in turn, promote inclusion, productivity, and innovation in the STEM workforce.

* This project has been supported by the National Science Foundation (NSF), Division of Equity for Excellence in STEM.

The primary purpose of this research project is to identify collaboration patterns among neurotypical and neurodiverse individuals performing a task in both in-person and remote settings. Specifically, we are interested in how neurotypical and autistic adults collaborate, both across and within these groups. We plan to collect data from various sensors (e.g., cameras, heart rate monitors) and use them to infer individuals' cognitive, emotional, and engagement states during collaborative tasks, and eventually to model collaborative behaviors. Ultimately, the outcomes of this research can inform workplace designs that provide individualized support and foster effective, enjoyable collaboration in neurodiverse teams. Conducted in collaboration with the Virginia Tech Autism Clinic & Center for Autism Research and the Department of Human Development and Family Science, this research project has been supported by the NIH R03 Grant Program.

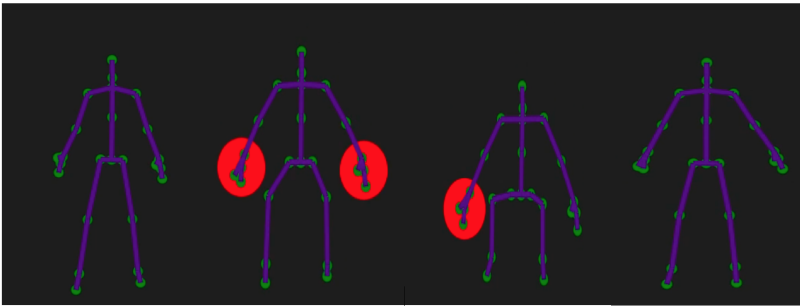

As robots have become more pervasive in our everyday lives, their social aspects have attracted researchers' attention. Because emotions play a crucial role in social interactions, prior research has examined how emotions can be conveyed through speech. Our study sought to investigate the synchronization of multiple modalities in human-robot interaction (HRI). We conducted a within-subjects exploratory study to investigate the effects of non-speech sounds (natural voice, synthesized voice, musical sound, and no sound) and basic emotions (anger, fear, happiness, sadness, and surprise) on user perception of the emotional body gestures of an anthropomorphic robot (Pepper).

The architecture, engineering, and construction (AEC) industry in the United States faces a shortage of skilled workers. Previous efforts in academia and industry have investigated various approaches to improving worker productivity. Voice-based systems present opportunities to improve worker productivity, but their usability and applicability in unstructured and noisy occupational settings, such as those inherent in construction projects, have not been explored. This study describes a prototype Voice-based Intelligent Virtual Agent (VIVA) and evaluates its impact on construction worker productivity. A usability study involving 20 students evaluated VIVA's effect on workers' performance and cognitive workload. The results indicated performance gains with VIVA, and the workload results indicate that assistance through voice does not impose an additional cognitive burden on workers. The proposed solution offers a novel use of voice-based agents in a dynamic and noisy occupational setting, in contrast to the conventional use of voice assistants in stationary or structured settings.